One of the things I've written about most often here is the mathematics of ballistics and ranged combat. [Here are two of the most significant posts: link1, link2. Do a search, and you'll find a lot more.]

Then, in my last update to the OED house rules [link], one of the edits I made was to change the penalty for range from a short/medium/long categorization to a flat −1 per 10 feet shot. The model that I was using previously followed the standard D&D three-step range categorization, but altered the modifiers in question, based on UK long-distance clout shooting tournaments and a computer-simulated model (per links above, and simulator software on GitHub). But in practice (based on my regular campaign game this year) the stepped categories seemed very weird. While the real-world-accurate model seems like it should be quadratic, what I realized was that a linear approximation is "good enough" in the close ranges where it matters. If the penalty at 480 feet turns out to be −48 when it should really be −32, that is, of course, entirely academic and won't make any practical difference in-game.

Example: Recently my PCs were traveling up a mountainous stairway ridge while under fire from a group of goblins. The PCs were crawling on the stairs to minimize their chance of being knocked off, while attempting to return fire. But the PCs could crawl for a few rounds with no change to their shot chances, and then in a certain round the threshold to the next category would be reached, and the penalty suddenly collapsed. The players were somewhat nonplussed by this, and I think reasonably so. Hence the new rule, which is both brain-dead simple to compute mentally and makes for a smooth, continuous gradation as opponents close with each other.

In summary: As of my most recent house rules edit, based on both real-world research and in-game testing, we have: −1 per 10 feet of distance on ranged shots. I also specify a 60-foot maximum range for thrown weapons. (Note that this splits the difference between the 3" range in Chainmail [30 yards] and the 3" seen in D&D [ostensibly 30 feet].)
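For anyone who wants the rule in executable form, here is a minimal Python sketch. The function and constant names are my own for illustration, and rounding down to full 10-foot increments is an assumption (it matches the −1 at 15 feet used in the worked example later in this post, but the rule text itself doesn't spell out the rounding).

```python
# Sketch of the range rule: -1 per 10 feet, 60 feet maximum for thrown weapons.
# Names are hypothetical; rounding down to full 10-foot steps is an assumption.

THROWN_MAX_RANGE_FEET = 60


def range_penalty(distance_feet: int) -> int:
    """Return the to-hit modifier for a shot at the given distance in feet."""
    return -(distance_feet // 10)


def thrown_in_range(distance_feet: int) -> bool:
    """Return True if a thrown weapon can be aimed at a target this far away."""
    return distance_feet <= THROWN_MAX_RANGE_FEET


if __name__ == "__main__":
    for feet in (15, 60, 480):
        print(feet, range_penalty(feet), thrown_in_range(feet))
```

At 480 feet this gives the −48 mentioned above, which is the "entirely academic" end of the scale.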

Since I posted that, most enticingly, a new academic paper of interest has been published: Milks, Annemieke, David Parker, and Matt Pope. "External ballistics of Pleistocene hand-thrown spears: experimental performance data and implications for human evolution." Scientific Reports 9.1 (2019): 820 [link]. The background starts with some academic debate about the distance at which primitive spear-throwing cultures could hunt prey: Many argue only 5-10 meters? Perhaps 15-20 meters? Some reports assert 50 meters?

What the authors do here is put the issue to a field test. First, they made replicas of 300,000-year-old wooden spears, presumably used by Neanderthals, as found at the Schöningen archaeological site (as shown to right). Then they found a half-dozen trained javelin athletes, set up hay-bale targets at various distances in a field, and had them throw a total of 120 shots (e.g., see picture at top). These shots were captured with high-speed cameras from which they could procure data on accuracy, speed, kinetic energy on impact, etc. From this it seems clear that the impacts could kill prey on a hit.

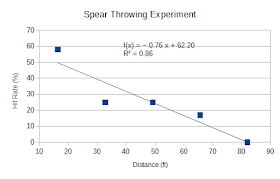

For my purposes, I'm mostly interested in the hit success rate. Here's the chart presented by the authors, followed by my recreation and regression on it.

Conclusions: For this data, we see that a linear regression on the chance to score a hit is indeed a pretty good model (R² = 0.86, i.e., it accounts for 86% of the variation in hit rate).

Moreover, the chance to hit drops about 0.76% per foot, that is, 7.6% per 10 feet. That's close enough to 5%, i.e., −1 in 20 per 10 feet, for game purposes, I think -- our current OED rule. And the maximum distance at which any hits were scored is 20 meters, that is, basically 60 feet, also the same as the OED rule. That's gratifying. (The authors' main conclusion is that the academic consensus for useful hunting range should be revised upward to at least 15-20 meters distance.)
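To make that comparison concrete, here is a small Python sketch of the unit conversion. It uses only the slope quoted above (0.76% per foot) and the fact that one point on a d20 is worth 5%; the function names are hypothetical, and nothing here re-runs the regression itself.

```python
# Convert the fitted slope (percent per foot) into d20 points per 10 feet.
# Only the 0.76 %/foot figure comes from the regression described above.

SLOPE_PCT_PER_FOOT = 0.76      # fitted drop in hit chance per foot
PCT_PER_D20_POINT = 5.0        # one point on a d20 is a 5% step
FEET_PER_METER = 3.28084


def pips_per_10_feet(slope_pct_per_foot: float) -> float:
    """Express a hit-chance slope as d20 points lost per 10 feet."""
    return slope_pct_per_foot * 10 / PCT_PER_D20_POINT


def meters_to_feet(meters: float) -> float:
    """Convert meters to feet."""
    return meters * FEET_PER_METER


if __name__ == "__main__":
    print(round(pips_per_10_feet(SLOPE_PCT_PER_FOOT), 2))  # about 1.52
    print(round(meters_to_feet(20), 1))                    # about 65.6
```

So the measured falloff is roughly one and a half points per 10 feet against the rule's one point, and the 20-meter limit is a shade over 65 feet against the rule's 60.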

A few side points: Note that the 60 feet maximum to score a hit on a bale-sized target is very different from the maximum distance throwable with the spear. The researchers also had the participants take a few throws purely for maximum distance, and these ranged from 20 meters to a bit over 30 meters (i.e., over 90 feet). Compare this to the base D&D system which fails to distinguish between the maximum bowshot and the maximum hittable bowshot, say. Interestingly: The more experienced throwers (in years) could throw longer distances, something we don't model in D&D.

Another point in that vein is that the hit rates are fairly low. In D&D, granted 4th-level fighters, an unarmored AC 9 target, and say +6 for being motionless/helpless as well, with −1 for 15 feet distance, I would expect to make a roll of d20 + 4 + 9 + 6 − 1 = d20 + 18, i.e., 95% chance to hit (compared to 58% at the first distance in the experiment). But the experiment run by the authors hobbles the throwers in at least two ways. One: "The participants in this study [were] trained in throwing but not in aiming for a target", which reflects standard javelin-throwing competitions today. So perhaps we should lower the equated fighter level in this regard. Two: Hay bales flat on the ground make for a very short target: around 1½ feet, only one-quarter the height of a man or horse, say? (As noted in my own long-distance archery field experiment with older equipment, it's easy to get shots laterally on target; the difficulty is getting the long-short distance correct; link.) The researchers here took a few experimental shots at 10 meters with two hay bales stacked on top of each other, and the hit rate immediately jumped from 17% to 33% (i.e., doubled). So perhaps our D&D model should also include a penalty for the short target. I'll leave crunching those numbers as an exercise for the reader (they work out reasonably well).
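As a starting point for that exercise, here is a minimal Python sketch of the to-hit arithmetic from the paragraph above. It assumes success means d20 plus all bonuses totals 20 or more (which is what makes d20 + 18 come out to 95%), and it does not model any automatic hit or miss on a natural 20 or 1. The function names are hypothetical.

```python
# Sketch of the to-hit arithmetic: succeed when d20 + level + AC + modifiers >= target.
# Assumptions: target of 20; no automatic hit/miss rules are modeled.


def hit_chance(level: int, armor_class: int, modifiers: int = 0, target: int = 20) -> float:
    """Return the probability that d20 + level + armor_class + modifiers >= target."""
    needed = target - (level + armor_class + modifiers)  # minimum d20 face required
    needed = min(max(needed, 1), 21)                      # clamp so the result stays in [0, 1]
    return (21 - needed) / 20


def gap_in_pips(modeled: float, observed: float) -> float:
    """Express the difference between two hit chances in d20 points (5% each)."""
    return (modeled - observed) * 20


if __name__ == "__main__":
    # 4th-level fighter, AC 9 target, +6 motionless, -1 for 15 feet.
    modeled = hit_chance(level=4, armor_class=9, modifiers=6 - 1)
    print(modeled)                                # 0.95
    print(round(gap_in_pips(modeled, 0.58), 1))   # about 7.4
```

The gap of about 7.4 points between the modeled 95% and the observed 58% is the amount that the two handicaps (throwers untrained in aiming, and a very short target) would need to account for between them.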

It's always super neat to see people putting historical speculations to practical tests -- especially this one, based on a 300,000-year-old find. Major thanks to Milks, Parker, and Pope for thinking this one up!